Training and Finetuning of MACE models¶

Version: X-2025.06

In this introductory tutorial, you will learn how to use QuantumATK to:

Train custom MACE machine-learned force-fields (ML FFs) via Python scripts which can be used for molecular dynamics (MD), geometry optimization, and other force-field-based simulations - in this tutorial for crystal and amorphous TiSi and TiSi2 structures.

Validate the quality of the trained MACE models.

Load a trained MACE model into QuantumATK in a workflow via the MachineLearnedForceFieldCalculator block.

In recent years, Machine Learned Force Fields (ML FFs) have advanced considerably with the emergence of universal foundation models. One of the current state-of-the-art potential architectures, offering a good trade-off between performance and accuracy, is MACE [1], a message-passing neural network (MPNN). Prominent universal foundation model versions of MACE are preinstalled and available for use in QuantumATK. While such a universal model can be an excellent calculator in its own right, it is trained to perform reasonably well within a very broad domain. This means that it has been trained on a large amount of data, and that the model has a size that limits the inference speed. For problems within a limited application domain, it is also possible to train custom MACE models within QuantumATK. MACE models can be trained either from scratch, which makes it possible to balance model size and simulation speed against accuracy, or by finetuning foundation models to achieve even better accuracy in a specific application domain while building on top of previous knowledge.

The amount of data needed to train a MACE model depends on the complexity of the system and the kind of training being conducted. In general, more data is preferred when training from scratch. For finetuning from a foundation model, however, on the order of 100 structures can be enough to reach good accuracy in a specific domain. In this tutorial, both approaches will be presented using a small dataset containing a mix of 241 crystal and amorphous structures of TiSi and TiSi2. This data was used to train a Moment Tensor Potential (MTP) in a different tutorial. The dataset is available for download: c-am-TiSi-TrainingSets.hdf5.

It should be noted that this system is chosen for demonstration purposes. For simple systems, the accuracy advantage of MACE over MTP is not significant. Training an MTP model may be the better choice here, due to its advantages in simulation speed. MACE is in general expected to be capable of reaching higher accuracy in complex systems where more traditional ML FFs face limitations.

Note

It is in general advised to do all training of MACE models on GPUs using 1 process per GPU. It is similarly recommended to run inference with trained MACE models on GPUs to utilize the model efficiently. Instructions on how to run on GPUs can be found in the technical notes.

In order to train a MACE model, we utilize the MACEFittingParameters and MachineLearnedForceFieldTrainer objects. The parameters object is used to set up all training parameters, while the trainer object is used to perform the training. In the reference manual, all available parameters are listed and explained. In this tutorial, only a subset of the parameters with high impact on the model training and accuracy are included. When training from scratch, the most important parameters are the maximum L value, which controls the symmetry (equivariance) of the messages sent within the model architecture, the distance cutoff, which controls the cutoff radius up to which the local environment should be encoded for each node, and the number of channels, which controls the number of features the local environment of each node is described by.

Note

In the X-2025.06 release, MACE model training modules are only available in scripting. Workflow Block support in the GUI is not yet included.

Training a MACE model from scratch¶

We first set up a script to train a small MACE model from scratch. The script is available for download:

Train_model_from_scratch.py.

The script first loads the training data. It is important that the training data already includes energy, forces, and (if desired) stress labels, as the MACE training framework does not perform these calculations. The data should be stored in one or more TrainingSet objects, which are then appended to a single list.

Next, the script sets up the calculator used for calculating the isolated atom energies for the atom types featured in the training data. It is important that the calculator is consistent with the precalculated training data. The calculator can either be loaded from a file, if it was saved as part of the preparation of the training data, or simply be redefined directly in the training script using the same settings.

Thereafter, the script sets up a MACEFittingParameters object that defines the model and hyperparameters for the MACE training.

Finally, the script sets up the MachineLearnedForceFieldTrainer object that coordinates the other objects and is used to perform the training.

The script trains a single MACE model on 80% of the 241 provided structures and validates the model on the remaining 20% in each training epoch. Various parameters are set to tune the model size, the training and inference speed, and the accuracy of the model. The three most important parameters introduced in the previous section are set to the following values:

max_l_equivariance = 0

distance_cutoff = 5.0

number_of_channels = 8

Here, the number_of_channels parameter is set to 8, which is a very low setting. Higher values will be explored in a small screening study for this parameter later in the tutorial.

The max_l_equivariance parameter is set to 0, which corresponds to MACE models named or referred to as “small” or “L0” MACE models. Similarly, max_l_equivariance = 1 corresponds to “medium”/“L1” models and max_l_equivariance = 2 corresponds to “large”/“L2” models. This is the parameter that influences the computational speed and accuracy of a model the most.

The remaining parameters are accompanied by short explanatory comments in the script. Additional parameters exist and are described in the MACEFittingParameters reference manual.

The main role of the MachineLearnedForceFieldTrainer object is to coordinate the training process. It takes as input the training data, the calculator, and the fitting parameters. Additionally, it takes care of creating the train-test split if desired. For MACE training it is not required to have a test set. A test set can be used to calculate RMSE values for the energies, forces, and stress at the end of the training, on data that the model has never seen before. It is therefore different from the validation set, which is created by taking a fraction (editable in the MACEFittingParameters) of the training data and using it to validate the model during training. By default, the validation set is 20% of the training data and the validation is run after each training epoch.
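The train/validation split described above can be illustrated with a minimal, standalone sketch. It does not use the QuantumATK API: the function name `split_indices`, the seed value, and the rounding of the validation set size are assumptions made for illustration only, and the actual split performed by QuantumATK may select different structures.

```python
import random


def split_indices(n_structures, validation_fraction=0.2, seed=42):
    """Shuffle structure indices and split them into training and validation parts.

    Illustrative helper only (not part of QuantumATK). The validation size is
    rounded to the nearest integer, which is an assumption.
    """
    indices = list(range(n_structures))
    random.Random(seed).shuffle(indices)
    n_valid = round(n_structures * validation_fraction)
    return indices[n_valid:], indices[:n_valid]


# 241 structures as in the TiSi/TiSi2 dataset, with the default 20% validation share.
train_idx, valid_idx = split_indices(241)
print(len(train_idx), len(valid_idx))
```

A separate test set could be held out in the same way before this split, so that it is never used during training or validation.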

In order to run the training, the script is loaded in the QuantumATK Editor, from where a job can be created and submitted via the Job Tool. Instructions on how to run on GPUs can be found in the technical notes. Using a single node with a single MPI process and a single GPU (Nvidia A100) requested in the Job Tool, the training script took approximately 9 minutes to run when creating this tutorial.

Before looking at the results of the training, the next sections show how existing models can be finetuned.

Naive Finetuning of foundation MACE models¶

Finetuning a foundation model can improve accuracy of the model for a specific domain while benefitting from the general capabilities of the original model. Due to the nature of finetuning, the architecture of the model is fixed and therefore cannot be changed into a smaller or larger model. The size is tied to the foundation model. Finetuning has the advantage that general interactions between types of atoms are already mapped quite well and that for specific domains at specific levels of theory these interactions only have to be finetuned - not learned from scratch which can be a lengthy process for large amounts of data.

In QuantumATK, finetuning is done by setting the foundation_model_path parameter in the MACEFittingParameters object to the path where the foundation model is located. This model can be any previously trained MACE .model file. In this tutorial we will use the mace-mp-0b3-medium model [2], one of the most recent, high-quality MACE models at the time of the X-2025.06 release, available at: mace-mp-0b3-medium.model.

Note

Finetunable foundation models are not included in the QuantumATK installation. Therefore, any universal MACE model to be finetuned must first be downloaded and then provided to the foundation_model_path parameter. Due to the size of the file, it may be beneficial to store the model in a location on a cluster and have the MACEFittingParameters reference the specific cluster location instead of copying the model from the local machine each time a job referencing the model is submitted.

The training script for finetuning the model with the TiSi and TiSi2 dataset is available for download: Train_model_with_naive_finetuning.py.

The script is similar to the one used for training from scratch. The main difference is the foundation_model_path parameter pointing to the location of the foundation model in the MACEFittingParameters object. When running the script, ensure that the path to the foundation model is updated to the location of the model on your system or the cluster where you will run the training.

During naive finetuning, the foundation model is trained further on new data for a specified number of epochs. While this can significantly improve accuracy within the new, limited domain, it also carries the risk that some of the general knowledge encoded in the original foundation model may be forgotten. This effect can be more pronounced if the new dataset is very different from the original training data. Attention to final model quality and the number of epochs to train for is therefore important to balance adaptation to new data with retention of the foundation model’s broader capabilities.

Using a single node, with a single MPI process, and a single GPU (Nvidia A100) requested in the Job tool the training script took approximately 10 minutes to run when creating this tutorial.

Multihead Finetuning of foundation MACE models¶

Multihead Finetuning, or Multihead Replay Finetuning, is a more refined approach to finetuning. In this approach, a foundation model also forms the basis of the training. Contrary to the Naive Finetuning approach, not only the foundation model is used, but also the training data used to train the foundation model. In practice this results in a model training on several model “heads” with separate readout layers but otherwise shared model weights. The first model head is the pretrained model head, which will be retrained on a subset of the original training data. How much of the original training data is used can be customized but by default 10000 original structures are used. The other model head will be the model head for the new training data. During training, non-readout weights are trained on both sets of training data, and therefore the model will better retain knowledge about the original training data. This minimizes the risk of the model straying away from key patterns learned from the original data while still allowing the model to learn from the new training data.

Since the Multihead Finetuning approach requires both a foundation model and the original training data, more parameters need to be set in the MACEFittingParameters object. To activate the Multihead version, the use_multiheads_finetuning parameter is activated. Furthermore, the pretrained_head_train_file parameter is set in one of two ways. If the original model is not one of the MP MACE models, the parameter is given the path to the original training data (in xyz file format). If the model is one of the MP MACE models, the parameter is simply set to pretrained_head_train_file='mp'. In this case, the original training data as well as a descriptors file need to be downloaded, and local/cluster paths need to be provided to the script. The data used to train the MACE MP foundation models [2][3] used in this tutorial is available at:

https://github.com/ACEsuit/mace-foundations/releases/download/mace_mp_0b/mp_traj_combined.xyz

https://github.com/ACEsuit/mace-foundations/releases/download/mace_mp_0b/descriptors.npy

When using Multihead Finetuning with an MP MACE model, these files have to be supplied via the mp_data_path and mp_descriptors_path parameters in the MACEFittingParameters object. Furthermore, the foundation_model_path parameter has to be set in the same way as for the Naive Finetuning.

The training script for Multihead Finetuning is available for download:

Train_model_with_multihead_finetuning.py.

The script is similar to the one used for training from scratch, apart from the extra parameters described. In order to run the script, ensure that the paths to the foundation model, the MP data, and the descriptors file are correct and updated to where you put the files on your machine/cluster. It is recommended to store them on the cluster where training will happen to reduce the time it takes to transfer files from the Job Tool upon submission.
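Since a long training job fails late if one of these paths is wrong, it can help to check them before submission. The following is a minimal sketch in plain Python, not QuantumATK code: the parameter names are the ones mentioned above, but the dictionary, the `/path/to/...` placeholder locations, and the helper `find_missing_files` are hypothetical and only serve as an illustration.

```python
from pathlib import Path

# Settings named in this section, collected in a plain dictionary for checking.
# The "/path/to/..." locations are placeholders; replace them with the real
# locations on your machine or cluster.
multihead_settings = {
    "foundation_model_path": "/path/to/mace-mp-0b3-medium.model",
    "use_multiheads_finetuning": True,
    "pretrained_head_train_file": "mp",
    "mp_data_path": "/path/to/mp_traj_combined.xyz",
    "mp_descriptors_path": "/path/to/descriptors.npy",
}


def find_missing_files(settings):
    """Return the names of path-valued settings whose file does not exist."""
    path_keys = [key for key in settings if key.endswith("_path")]
    return [key for key in path_keys if not Path(settings[key]).is_file()]


missing = find_missing_files(multihead_settings)
if missing:
    print("Update these paths before submitting:", ", ".join(missing))
```

With the placeholder paths left unchanged, all three path settings are reported as missing.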

Using a single node with a single MPI process and a single GPU (Nvidia A100) requested in the Job Tool, the training script took several hours to run when creating this tutorial. It may be advantageous to skip this script and instead use the multi-GPU script introduced at the beginning of the next section, which trains this model as well as the other models in less time.

For any MACE training, the job can be monitored via its log output while running. Here, loss scores and error metrics (RMSE by default) for energy, forces, and stress are reported on the validation set after each epoch. After the end of the training, the error metrics are reported for the best trained model on the train and validation set - as determined by the model with lowest loss score.

For the Multihead Finetuning, the loss and error metrics are also reported for the model head retraining on the original data. The default model head is associated with the new training data and the pt_head model head is associated with the original training data. The train and valid prefixes indicate whether the metrics are reported for the training or the validation set.

As an example of the log output, the following error metrics are obtained as the Multihead Finetuning training script finalizes:

| config_type | RMSE E / meV / atom | RMSE F / meV / A | relative F RMSE % | RMSE Stress (Virials) / meV / A (A^3) |
|---|---|---|---|---|
| train_default | 15.9 | 94.2 | 7.94 | 14.8 |
| train_pt_head | 71.2 | 77.8 | 5.86 | 9.2 |
| valid_default | 17.3 | 100.8 | 9.47 | 13.1 |
| valid_pt_head | 46.2 | 87.3 | 4.54 | 8.5 |

The RMSE values for the validation set are in general slightly higher than for the training set for the new model head, _default. In the _pt_head model head, the picture is a little more mixed, but the validation error for the forces, which has the largest weight, is also larger than the training error. This behaviour is expected because the model only updates its parameters based on the training data during the training. The validation set is used for monitoring the model performance during training without impacting the model parameters.

The combination of train and validation error metrics indicates the overall magnitude of the model accuracy. However, a measure of accuracy on completely unseen data is necessary to truly assess the predictive capabilities of the model. When training a model from scratch or with Naive Finetuning, only two rows of error metrics will be reported since only one model head is trained. For this Multihead Finetuning example, four rows are obtained due to training on two model heads. For most practical purposes, it is mainly the _default head that needs good error metrics, since this is the model head that is automatically extracted to a .qatkpt model file for use in QuantumATK when using Multihead Finetuning.
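To make the columns of the error table more concrete, the sketch below shows one common way such metrics can be computed from predicted and reference values. It is a plain Python illustration on made-up toy numbers, not the code that produces the log: the function names are hypothetical, and the exact definitions used in the log output (in particular for the relative force RMSE) are assumed here rather than taken from the reference manual.

```python
import math


def rmse(values):
    """Root-mean-square of a list of numbers."""
    return math.sqrt(sum(v * v for v in values) / len(values))


def energy_rmse_per_atom(e_pred, e_ref, n_atoms):
    """RMSE of the per-atom energy error; inputs are per-structure lists."""
    return rmse([(p - r) / n for p, r, n in zip(e_pred, e_ref, n_atoms)])


def force_rmse(f_pred, f_ref):
    """RMSE over all force components; inputs are flat lists of components."""
    return rmse([p - r for p, r in zip(f_pred, f_ref)])


def relative_force_rmse_percent(f_pred, f_ref):
    """Force RMSE relative to the RMS of the reference forces, in percent (assumed definition)."""
    return 100.0 * force_rmse(f_pred, f_ref) / rmse(f_ref)


# Toy values in eV and eV/Angstrom, for illustration only.
e_pred, e_ref, n_atoms = [-10.0, -20.2], [-10.1, -20.0], [2, 4]
f_pred, f_ref = [0.1, -0.2, 0.3], [0.0, -0.2, 0.4]

print(1000 * energy_rmse_per_atom(e_pred, e_ref, n_atoms), "meV/atom")
print(1000 * force_rmse(f_pred, f_ref), "meV/Angstrom")
print(relative_force_rmse_percent(f_pred, f_ref), "%")
```

Evaluating the same functions on a held-out test set gives the measure of accuracy on unseen data discussed above.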

Small study with additional models trained from scratch¶

To give a slightly more nuanced view of how changing MACE training parameters can affect the accuracy and performance of a model trained from scratch, a few more models will be trained from scratch before the models are tested on unseen data. In these models, number_of_channels varies between 8 and 128, while the distance_cutoff of 5 Angstrom and the max_l_equivariance of 0 (corresponding to small/“L0” MACE models) are kept fixed. This is all done in one training script, where the various models are defined using MACEFittingParameters objects. Each object is conveniently generated by calling the previous MACEFittingParameters object in the training series, which copies its settings while only the parameters to be updated are set. In this way we can easily generate new model training parameters with increasing numbers of channels. If the models from the previous sections have not yet been trained, they can also conveniently be generated with this last training script, available for download here:

Train_mace_models.py.
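The copy-and-update pattern described above can be sketched with a small stand-in class. This is not the QuantumATK MACEFittingParameters class: `FittingSettings` is a hypothetical dataclass that only mimics the idea of calling an existing object to obtain a copy with selected settings changed, and the intermediate channel counts (16, 32, 64) are assumed values between the stated limits of 8 and 128.

```python
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class FittingSettings:
    """Hypothetical stand-in for a fitting-parameters object (not the QuantumATK class)."""

    max_l_equivariance: int = 0
    distance_cutoff: float = 5.0  # Angstrom
    number_of_channels: int = 8

    def __call__(self, **updates):
        # Return a copy in which only the given settings are changed.
        return replace(self, **updates)


base = FittingSettings()
series = [base]
for channels in (16, 32, 64, 128):  # assumed screening values
    # Each new entry copies the previous settings and updates one parameter.
    series.append(series[-1](number_of_channels=channels))

print([s.number_of_channels for s in series])
```

Because every object is derived from the previous one, the cutoff and the equivariance setting stay fixed across the series without being repeated.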

Instead of running the training on a single process and a single GPU, this time we take advantage of the fact that the implementation of MACE training in QuantumATK allows distributed training. By setting the parameter distributed_training=True, the training can be run on multiple GPUs (with 1 MPI process per GPU). In order to run the script, ensure that the script is updated with the path to the MACE model file to finetune from as well as the paths to the original training data files.

Tip

If using multiple GPUs, it is in general recommended to use the same number of MPI processes and GPUs and no threading. The training should be kept to a single node. When training runs in a distributed setup, error messages can be hard to interpret or may not be available at all. If errors occur in distributed training, it can therefore be helpful to run the training on a single process and GPU to retrieve the underlying error message.

Running the script on 8 MPI processes and 8 GPUs (Nvidia A100), the training of the 7 models took approximately 2 hours in total.

Validation of trained models¶

With the models trained, we can now validate them by comparing the energies and forces predicted by the MACE models with reference DFT data for unseen structures, generated with Molecular Dynamics using the reference DFT calculator. This data, containing a mix of crystal and amorphous structures, is stored in the file md_example_results.hdf5.

With a different script, run_benchmarks.py, we can load the trained models and the reference data to compare the energies and forces of the MACE models with the reference DFT data, as well as run performance benchmarks of an approximately 5000-atom structure for 1000 MD steps. The script outputs JSON files containing RMSE values for the energy and force predictions as well as the performance benchmark results, given as the time taken per atom per step in the Molecular Dynamics simulation, for each model type. In addition to the 7 models trained with the scripts in this tutorial, the benchmarks also include the foundation model, available as the TorchX_MACE_MP_0b3_medium potential set in the X-2025.06 release, and the MTP trained in the related MTP tutorial using the same training data. The benchmarking script was run using a single node, with a single MPI process, and a single GPU (Nvidia A100).
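The performance metric used in the benchmarks, time per atom per MD step, is a simple normalization of the wall time. The sketch below shows the arithmetic and how such a result could be stored as JSON. It is independent of run_benchmarks.py: the function name, the dictionary keys, the model label, and the wall time of 250 s are hypothetical and are not results from this tutorial.

```python
import json


def time_per_atom_per_step(wall_time_s, n_atoms, n_steps):
    """Wall time normalized by system size and number of MD steps, in seconds."""
    return wall_time_s / (n_atoms * n_steps)


# Hypothetical numbers for illustration only; they are not tutorial results.
result = {
    "model": "example_model",
    "n_atoms": 5000,
    "n_steps": 1000,
    "wall_time_s": 250.0,
}
result["time_per_atom_per_step_s"] = time_per_atom_per_step(
    result["wall_time_s"], result["n_atoms"], result["n_steps"]
)

print(json.dumps(result, indent=2))
```

Normalizing by both atoms and steps makes timings from structures of different sizes roughly comparable.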

The results of the benchmarks in the json files are shown in the figures below.

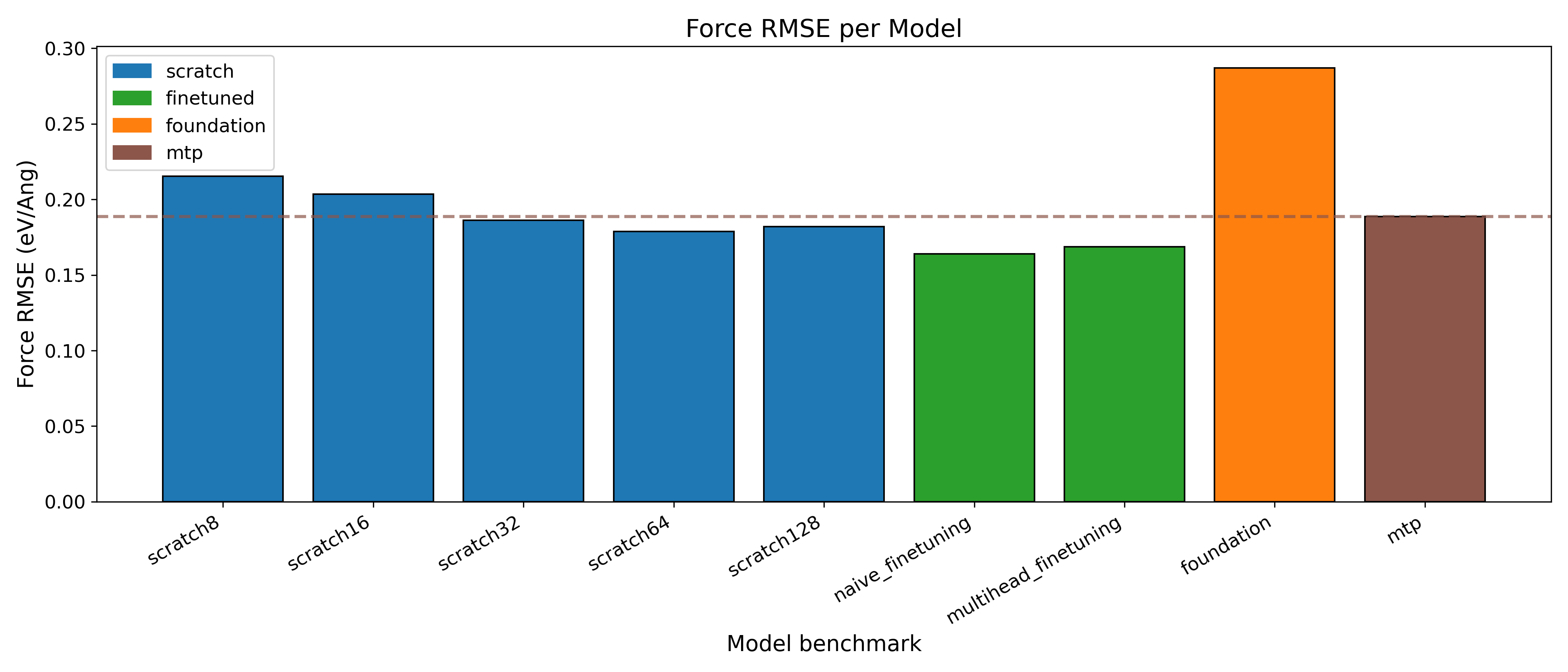

The two plots above provide a comparison of the different MACE models trained from scratch, the finetuned models, the foundation model, and the MTP model. The forces RMSE plot shows that increasing the number of channels in the MACE models trained from scratch leads to a consistent improvement in accuracy. Based on this plot, it is evident that the finetuned MACE models are the best performing models, achieving higher accuracy than the MTP, the foundation model, and the models trained from scratch.

Note that the obtained accuracy (for both energy and other predicted quantities) can fluctuate depending on the initial weights. In this tutorial each model was only trained once, with a given random seed, and therefore the accuracy of the models is not guaranteed to be the best possible. In a more systematic study, one might want to run several training runs with different initial weights (by setting another random_seed in MACEFittingParameters) in order to increase the likelihood of the best possible model being created.

For a brief comparison between the train/validation errors and the test errors, we look at the forces error metrics of the multihead finetuned model, since the forces have the highest weight, and therefore importance, in the loss function. In the error metrics table from the output log in the Multihead Finetuning of foundation MACE models section, the train and validation errors for the forces are 94.2 meV/Å and 100.8 meV/Å, respectively. The test error for the multihead finetuned model in the plot above is 168.6 meV/Å. This indicates that the model generalizes reasonably well to unseen data, but with a somewhat lower actual accuracy than reported on the train/validation set. The more training data is included, the more likely it is that the model will generalize well to unseen data. Similarly, the more diverse the training data, the higher the accuracy of the model is likely to become across all error metrics.

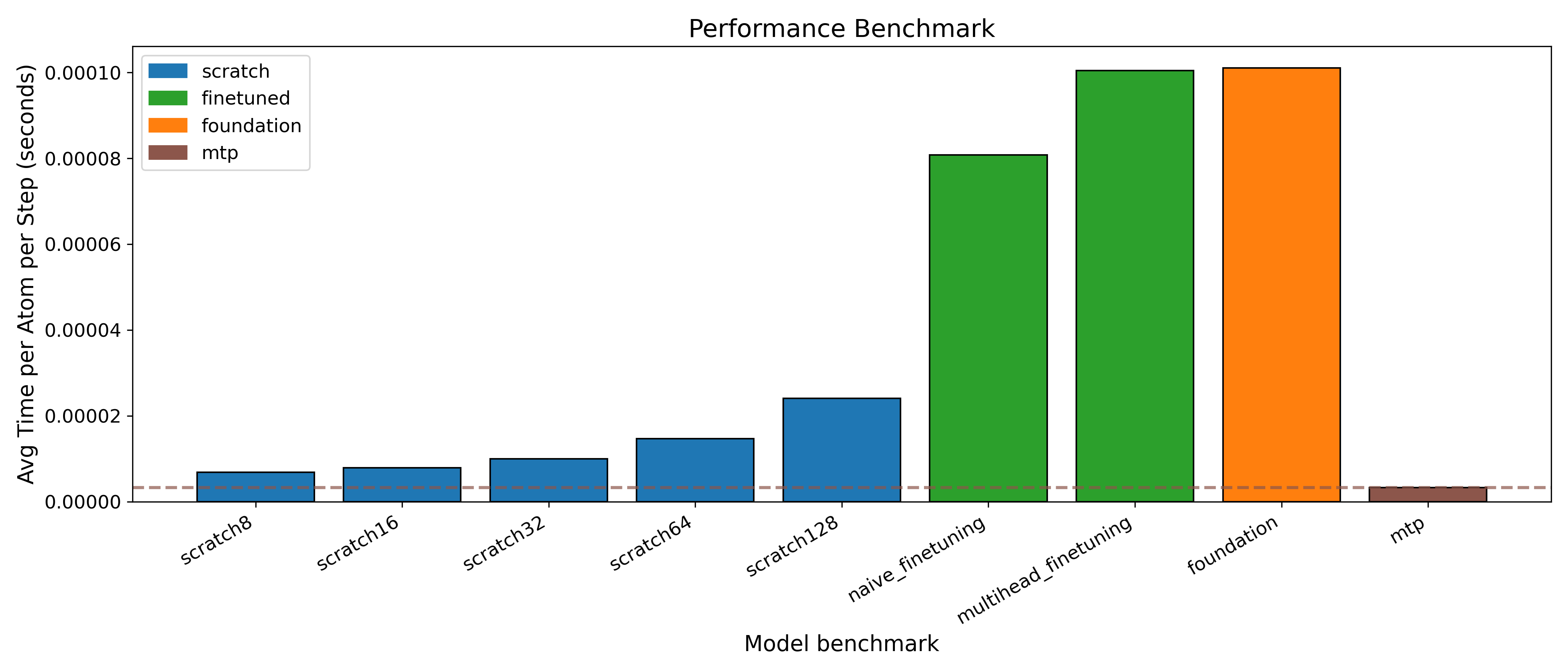

Models with higher numbers of channels achieve lower RMSE values for forces, indicating better agreement with the reference DFT data. This includes the finetuned models, which have 128 channels and a max_l_equivariance setting of 1, corresponding to a medium/“L1” level model. However, this improvement comes at the cost of increased model size and slower inference, as also summarized in the performance benchmark plot, where the small models trained from scratch make predictions almost as fast as the MTP while the medium-sized finetuned and foundation models are orders of magnitude slower.

Focusing specifically on the two finetuned models, it is evident that the naively finetuned model is slightly faster than, and about as accurate as, the multihead finetuned model. The speed difference comes down to the fact that the naive model only contains atom type encodings for the Ti and Si atoms. Because it was not possible to find 10000 configurations in the original training data to replay training with, the multihead training included some additional atom types in the training data. Therefore, the model contains more parameters to handle the additional atom types, which leads to a slightly larger and slower model.

As evident from the forces RMSE plot, the finetuned models are likely to perform better than models trained from scratch when trained only on a limited amount of data. The more data is included in the training, the higher the likelihood that models trained from scratch can learn the underlying physical interactions as well as, or even better than, the finetuned versions. For large data sets, training from scratch is often an option worth exploring: if the accuracy can reach the same level as the finetuned models, it might be possible to train an accurate and small (max_l_equivariance=0) model that is faster than models finetuned from foundation models. However, because less training data is needed and the nature of the physical interactions has, to a large degree, already been learned by the foundation models, finetuning is often the more practical path to accurate models if performance is not of critical importance.

Since this tutorial is mainly intended as an introduction to training MACE models in various ways, no detailed parameter study has been carried out. Similarly, it has not been verified that the models have converged. For guidance on how to set up training with a high probability of convergence, see the General remarks section.

Impact of important parameters¶

The following table summarizes the impact of key parameters on model size, inference time, and accuracy when training from scratch as well as the pros and cons of finetuning.

| Parameter / Model setting | Model Size and Memory | Inference Time | Accuracy |
|---|---|---|---|
| Increasing max_l_equivariance | Higher values significantly increase model size. | Increases (can be much slower for higher values). | Generally higher, especially for complex systems. For simple systems, gains may be limited. |
| Increasing distance_cutoff | Increases the required amount of memory. | Increases steeply, since the number of neighbour atoms in each local environment grows roughly with the cube of the cutoff. | Improves accuracy for systems with long-range interactions. For short-range systems, smaller cutoffs may suffice. |
| Increasing number_of_channels | More channels increase model size linearly. | Increases, but less dramatically than max_l_equivariance. | Higher number of channels generally improves accuracy, especially for forces. Diminishing returns for very high values. |
| Finetuning foundation models | Fixed to model architecture of foundation model used. | Similar to foundation model used. | Typically improves accuracy over training from scratch, especially with limited data. |
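The effect of the distance_cutoff on the size of the local environments can be illustrated by counting neighbours in an idealized lattice. The sketch below is a toy model only: it assumes a simple cubic lattice with a hypothetical lattice constant of 2.5 Å, which is not the TiSi or TiSi2 structure, and the function name is made up for this example.

```python
import math


def neighbours_within_cutoff(cutoff, lattice_constant):
    """Count lattice sites within `cutoff` of one atom in a simple cubic lattice."""
    n_max = math.floor(cutoff / lattice_constant)
    count = 0
    for i in range(-n_max, n_max + 1):
        for j in range(-n_max, n_max + 1):
            for k in range(-n_max, n_max + 1):
                if (i, j, k) == (0, 0, 0):
                    continue  # skip the central atom itself
                if (i * i + j * j + k * k) * lattice_constant**2 <= cutoff**2:
                    count += 1
    return count


# Cutoffs in Angstrom, spanning the 4-7 Angstrom range discussed in this section.
for cutoff in (4.0, 5.0, 6.0, 7.0):
    print(cutoff, neighbours_within_cutoff(cutoff, lattice_constant=2.5))
```

In this toy lattice the neighbour count more than quadruples between a cutoff of 4 Å and 7 Å, which is why larger cutoffs cost both memory and inference time.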

Based on these considerations, the following general recommendations apply when choosing to train a MACE model from scratch. Note that a requirement for reaching a good model is that sufficient amounts of diverse training data are available.

max_l_equivariance: Typically 0 or 1. Start with 0; optionally increase if accuracy is insufficient and sufficient training data is available.

distance_cutoff: Typically 5–6 Å. Keep within a range of 4–7 Å.

number_of_channels: Typically 64 or 128. Smaller numbers of channels can be tested for creating smaller models where speed-accuracy tradeoffs can be investigated.

There is no guarantee that training from scratch will yield a model that is better than finetuning a foundation model. If the training data is limited or not exhaustive enough, finetuning a foundation model is the better choice. If the training data is large and diverse enough, both approaches can be tested for the given use case. Under the right circumstances, a model trained from scratch might be able to achieve similar accuracy as a finetuned model while being smaller and faster. This is however not guaranteed and depends on the specific training data and application domain.

General remarks¶

The following remarks are general notes regarding training of MACE models and are not specific to the examples in this tutorial. They are included here for reference.

Restarting is by default activated in the MACE training. This means that if a training is interrupted, it can be resumed from the last checkpoint. It however has to be resubmitted in the same job folder - this is automatically the case when using the Job Tool and using the resubmit button for a job that has timed out, or when resubmitting the same submission script from the command line. Otherwise the training will start from the beginning again due to the lack of a checkpoints folder.

For general training of MACE models there is a rough rule of thumb for making the training likely to converge. The number of gradient updates, given by number_of_training_configurations * max_number_of_epochs / batch_size, should be on the order of 200000 to ensure a good likelihood of the training converging. For small models, this number can be lower, while for larger models it may be higher, but the general rule is good to keep in mind nonetheless. The exact number differs depending on the model architecture and the specific training, but it offers a good starting point when training from scratch. For finetuning, the number of gradient updates required may be lower, but as a starting point the rule of thumb can still be followed. For Multihead Finetuning, the replayed training configurations are also included in the number of training configurations for determining the number of gradient updates. Note that in any kind of training, setting the patience parameter to a reasonable value (relative to the max_number_of_epochs) can cut the training short if the training seemingly has converged early. This is not required but can be a good practice to avoid unnecessary training time.

In order to avoid using a lot of disk space and saving a lot of files that will not be used, it may be beneficial to not save all the checkpoints during the training, as in the end only the best checkpoint from the training will be used to generate the final model. As has been done in all examples in this tutorial, this is achieved by deactivating the keep checkpoints parameter: keep_checkpoints=False.

As with any kind of machine learning model, during experimentation several train-validation or, if preferred, train-validation-test splits should be screened to ensure that the model is not overfitting to the training data and that the data splitting “hyperparameters” (random_seed, both in MACEFittingParameters and in MachineLearnedForceFieldTrainer if a test set is created) are set meaningfully and optimized. The random seed in the MACEFittingParameters also controls the random initialization of the model weights, which can have a significant impact on the final model performance. Therefore, when training from scratch, it is recommended to run the training several times with different random seeds to ensure that different initial weights are attempted as part of the model training process.

The most important hyperparameters to screen in general have all been introduced during this tutorial. For delicate applications other hyperparameters, as documented in the MACEFittingParameters, can also be tuned. However, this is mainly recommended for expert users, as it requires more profound experience.
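The rule of thumb for the number of gradient updates is easy to evaluate before starting a training. The sketch below applies the formula stated above in plain Python. The function names are hypothetical, and the example values are assumptions: 193 training configurations (about 80% of the 241 structures) and a batch size of 5, which is not necessarily the value used in the tutorial scripts.

```python
import math


def gradient_updates(n_train_configs, max_epochs, batch_size):
    """Rule-of-thumb count: training configurations * epochs / batch size."""
    return n_train_configs * max_epochs / batch_size


def epochs_for_target(n_train_configs, batch_size, target_updates=200_000):
    """Smallest number of epochs that reaches the target number of gradient updates."""
    return math.ceil(target_updates * batch_size / n_train_configs)


n_train = 193   # assumed: about 80% of the 241 structures
batch_size = 5  # assumed value for illustration

print(gradient_updates(n_train, max_epochs=1000, batch_size=batch_size))
print(epochs_for_target(n_train, batch_size))
```

For a dataset this small, reaching the order of 200000 updates requires several thousand epochs, which is where a sensible patience setting helps to stop a training that has already converged.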

Loading custom MACE models into QuantumATK¶

As part of the validation scripts, it has already been shown how to load a custom MACE model into QuantumATK in scripting. Custom MACE models can also be loaded from within the Workflow Builder in Nanolab to generate full workflows. Continuing with the multihead_finetuned model trained above, a TiSi configuration is loaded from a database as a BulkConfiguration.

The “Set MachineLearnedForceFieldCalculator” block is then selected. In the Potential set type dropdown menu, MACE_potential is selected. This updates the widget menu specifically to custom MACE models. In the MACE filename field, the file with the model can be selected by clicking the ... symbol. Note that the file to choose is the file with the .qatkpt extension. After choosing the model file, click the blue arrow in the left part of the filename field to verify that the file has been found and is compatible. If suitable for the desired simulation, the Float precision can be changed to 64-bit, which is recommended, e.g., for accurate optimization, dynamical matrix calculations, NEB, or transition state optimization. This example will only set up a molecular dynamics simulation, so the value is kept at 32-bit. After this, the calculator is ready to use and the widget can be closed again.

A simple MolecularDynamics block can be set up to complete the workflow. Note that the script can run on either CPU alone or also include GPU. This is automatically handled at runtime, as described in the technical notes, depending on available resources and licenses. If the license configuration supports it, running with GPU enabled is recommended for MACE models to ensure efficient inference.

The Workflow outlined above is available for download: Trained_model_usage_example_with_MD.hdf5.

The workflow can be added manually to the Workflows folder within the current project and thereafter opened in the workflow menu in QuantumATK. In order to remove the error shown in the “Set MachineLearnedForceFieldCalculator” block, the path to the custom MACE model must be updated in the MACE filename field. Alternatively, the workflow can be acquired and set up in a separate QuantumATK project by downloading all the mentioned project files in a zip folder, unpacking it, and opening the folder as a project in QuantumATK with all trained models included. The zip folder can be downloaded here:

Tutorial_MACE_amorphous_and_crystal_structures_all_data.zip

Summary¶

In this tutorial, it has been shown how to train MACE models from scratch, how to finetune foundation models, and how to validate trained models on unseen data. It has also been shown how to load custom MACE models into QuantumATK and use them in workflows and scripts. The tutorial has provided a brief overview of the most influential MACE training parameters and how they affect the model size, inference time, and accuracy. Furthermore, strategies for training new models with custom data, as well as considerations to take into account when training models for custom applications, have been discussed.

Compared to MTP, MACE models can provide an even better accuracy, especially for very complex systems with many atoms, at the expense of slower simulation speed.